Automated Reasoning checks, multi-agent collaboration, and Model Distillation build on the strong foundation of enterprise-grade capabilities available on Amazon Bedrock to help customers go from proof of concept to production-ready generative AI faster

At AWS re:Invent, Amazon Web Services, Inc. (AWS), an Amazon.com, Inc. company (NASDAQ: AMZN), today announced new capabilities for Amazon Bedrock, a fully managed service for building and scaling generative artificial intelligence (AI) applications with high-performing foundation models. Today’s announcements help customers prevent factual errors due to hallucinations, orchestrate multiple AI-powered agents for complex tasks, and create smaller, task-specific models that can perform similarly to a large model at a fraction of the cost and latency.

This press release features multimedia. View the full release here: https://www.businesswire.com/news/home/20241203470182/en/

- Automated Reasoning checks is the first and only generative AI safeguard that helps prevent factual errors due to model hallucinations, opening up new generative AI use cases that demand the highest levels of precision.

- Customers can use multi-agent collaboration to easily build and orchestrate multiple AI agents to solve problems together, expanding the ways customers can apply generative AI to address their most complex use cases.

- Model Distillation empowers customers to transfer specific knowledge from a large, highly capable model to a smaller, more efficient one that can be up to 500% faster and 75% less expensive to run.

- Tens of thousands of customers use Amazon Bedrock today, with Moody’s, PwC, and Robin AI among those leveraging these new capabilities to cost-effectively scale inference and push the limits of generative AI innovation.

“With a broad selection of models, leading capabilities that make it easier for developers to incorporate generative AI into their applications, and a commitment to security and privacy, Amazon Bedrock has become essential for customers who want to make generative AI a core part of their applications and businesses,” said Dr. Swami Sivasubramanian, vice president of AI and Data at AWS. “That is why we have seen Amazon Bedrock grow its customer base by 4.7x in the last year alone. Over time, as generative AI transforms more companies and customer experiences, inference will become a core part of every application. With the launch of these new capabilities, we are innovating on behalf of customers to solve some of the top challenges, like hallucinations and cost, that the entire industry is facing when moving generative AI applications to production.”

Automated Reasoning checks prevent factual errors due to hallucinations

While models continue to advance, even the most capable ones can hallucinate, providing incorrect or misleading responses. Hallucinations remain a fundamental challenge across the industry, limiting the trust companies can place in generative AI. This is especially true for regulated industries, like healthcare, financial services, and government agencies, where accuracy is critical, and organizations need to audit to make sure models are responding appropriately. Automated Reasoning checks is the first and only generative AI safeguard that helps prevent factual errors due to hallucinations using logically accurate and verifiable reasoning. By increasing the trust that customers can place in model responses, Automated Reasoning checks opens generative AI up to new use cases where accuracy is paramount.

Automated reasoning is a branch of AI that uses math to prove something is correct. It excels when dealing with problems where users need precise answers to a topic that is large and complex, and that has a well-defined set of rules or collection of knowledge about the subject. AWS has a team of world-class automated reasoning experts who have used this technology over the last decade to improve experiences across AWS, like proving that permissions and access controls are implemented correctly to enhance security or checking millions of scenarios across Amazon Simple Storage Service (S3) before deployment to ensure availability and durability remain protected.

Amazon Bedrock Guardrails makes it easy for customers to apply safety and responsible AI checks to generative AI applications, allowing customers to guide models to only talk about relevant topics. Accessible through Amazon Bedrock Guardrails, Automated Reasoning checks now allows Amazon Bedrock to validate factual responses for accuracy, produce auditable outputs, and show customers exactly why a model arrived at an outcome. This increases transparency and ensures that model responses are in line with a customer’s rules and policies. For example, a health insurance provider that needs to ensure its generative AI-powered customer service application responds correctly to customer questions about policies could benefit from Automated Reasoning checks. To apply them, the provider uploads its policy information, and Amazon Bedrock automatically develops the necessary rules and guides the provider through iteratively testing them to ensure the model is tuned to the right response, with no automated reasoning expertise required. The insurance provider then applies the check, and as the model generates responses, Amazon Bedrock verifies them. If a response is incorrect, like getting the deductible wrong or flagging a procedure that is not covered, Amazon Bedrock suggests the correct response using information from the Automated Reasoning check.
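As a rough illustration of the verify-then-suggest flow in the insurance example, the sketch below checks structured claims taken from a model response against a small set of policy rules and returns a suggested correction for each violation. It is not the Amazon Bedrock API or its implementation: the POLICY values, the Rule class, and check_response are all hypothetical, and plain Python predicates stand in for formal automated reasoning.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical policy facts, standing in for rules derived from uploaded policy documents.
POLICY = {"deductible": 500, "covered_procedures": {"x-ray", "physical therapy"}}


def deductible_ok(claims: dict) -> bool:
    # A response that states no deductible cannot get it wrong.
    return "deductible" not in claims or claims["deductible"] == POLICY["deductible"]


def coverage_ok(claims: dict) -> bool:
    if "procedure" not in claims:
        return True
    actually_covered = claims["procedure"] in POLICY["covered_procedures"]
    return claims.get("covered") == actually_covered


def coverage_fix(claims: dict) -> str:
    covered = claims["procedure"] in POLICY["covered_procedures"]
    status = "covered" if covered else "not covered"
    return f"{claims['procedure']} is {status} under the policy."


@dataclass
class Rule:
    name: str
    holds: Callable[[dict], bool]    # True when the claims agree with the policy
    suggest: Callable[[dict], str]   # Correction to offer when they do not


RULES = [
    Rule("deductible_matches_policy", deductible_ok,
         lambda claims: f"The policy deductible is ${POLICY['deductible']}."),
    Rule("coverage_matches_policy", coverage_ok, coverage_fix),
]


def check_response(claims: dict) -> list:
    """Return (rule name, suggested correction) for each rule the claims violate."""
    return [(rule.name, rule.suggest(claims)) for rule in RULES if not rule.holds(claims)]
```

In this sketch, check_response({"deductible": 250}) reports a violation of deductible_matches_policy together with the corrected figure, while a response whose claims match the policy returns an empty list. Extracting structured claims from free-text model output is assumed to happen elsewhere.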

PwC, a global professional services firm, is using Automated Reasoning checks to create AI assistants and agents that are highly accurate, trustworthy, and useful to drive its clients’ businesses to the leading edge. PwC incorporates Automated Reasoning checks into industry-specific solutions for clients in financial services, healthcare, and life sciences, including applications that verify AI-generated compliance content with Food and Drug Administration (FDA) and other regulatory standards. Internally, PwC employs Automated Reasoning checks to ensure that responses generated by generative AI assistants and agents are accurate and compliant with internal policies.

Easily build and coordinate multiple agents to execute complex workflows

As companies make generative AI a core part of their applications, they want to do more than just summarize content and power chat experiences. They also want their applications to take action. AI-powered agents can help customers’ applications accomplish these actions by using a model’s reasoning capabilities to break down a task, like helping with an order return or analyzing customer retention data, into a series of steps that the model can execute. Amazon Bedrock Agents makes it easy for customers to build these agents to work across a company’s systems and data sources. While a single agent can be useful, more complex tasks, like performing financial analysis across hundreds or thousands of different variables, may require a large number of agents with their own specializations. However, creating a system that can coordinate multiple agents, share context across them, and dynamically route different tasks to the right agent requires specialized tools and generative AI expertise that many companies do not have available. That is why AWS is expanding Amazon Bedrock Agents to support multi-agent collaboration, empowering customers to easily build and coordinate specialized agents to execute complex workflows.

Using multi-agent collaboration in Amazon Bedrock, customers can get more accurate results by creating and assigning specialized agents for specific steps of a project and accelerate tasks by orchestrating multiple agents working in parallel. For example, a financial institution could use Amazon Bedrock Agents to help carry out due diligence on a company before investing. First, the customer uses Amazon Bedrock Agents to create a series of specialized agents focused on specific tasks, like analyzing global economic factors, assessing industry trends, and reviewing the company’s historical financials. After creating all of its specialized agents, the customer creates a supervisor agent to manage the project. The supervisor then handles the coordination, like breaking up and routing tasks to the right agents, giving specific agents access to the information they need to complete their work, and determining which actions can be processed in parallel and which need details from other tasks before the agent can move forward. Once all of the specialized agents have completed their work, the supervisor agent pulls the information together, synthesizes the results, and develops an overall risk profile.
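The coordination pattern in the due diligence example can be sketched in a few lines. This is only an illustration under stated assumptions, not the Amazon Bedrock Agents API: the agent functions, the AGENTS table, and supervise are hypothetical, and each "agent" is an ordinary Python function returning a canned string. The supervisor runs every agent whose dependencies are satisfied in parallel, then hands the collected results to the agent that depends on them.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical specialist agents: each receives the results it depends on.
def economy_agent(inputs):
    return "stable global outlook"

def industry_agent(inputs):
    return "sector growing"

def financials_agent(inputs):
    return "revenue up three years running"

def risk_agent(inputs):
    # Synthesizes the upstream findings into one summary.
    return "risk profile from: " + "; ".join(inputs.values())

# Agent name -> (function, names of agents whose results it needs).
AGENTS = {
    "economy": (economy_agent, []),
    "industry": (industry_agent, []),
    "financials": (financials_agent, []),
    "risk_profile": (risk_agent, ["economy", "industry", "financials"]),
}

def supervise(agents):
    """Run agents in dependency order, executing independent ones in parallel."""
    results = {}
    pending = dict(agents)
    with ThreadPoolExecutor() as pool:
        while pending:
            # An agent is ready once all of its dependencies have results.
            ready = [name for name, (_, deps) in pending.items()
                     if all(dep in results for dep in deps)]
            if not ready:
                raise ValueError("circular or missing dependency")
            futures = {
                name: pool.submit(pending[name][0],
                                  {dep: results[dep] for dep in pending[name][1]})
                for name in ready
            }
            for name, future in futures.items():
                results[name] = future.result()
                del pending[name]
    return results
```

Calling supervise(AGENTS) runs the three independent agents in the first round and the risk_profile agent in the second, once its inputs exist. A real supervisor would also decide how to split and route tasks, which this sketch hard-codes in the AGENTS table.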

Moody’s, a global leader in credit ratings and financial insights, has chosen Amazon Bedrock multi-agent collaboration to enhance its risk analysis workflows. Moody’s is using Amazon Bedrock to create agents, each of which is assigned a specific task and given access to tailored datasets to perform its role. For example, one agent might analyze macroeconomic trends, while another evaluates company-specific risks using proprietary financial data, and a third benchmarks competitive positioning. These agents collaborate seamlessly, synthesizing their outputs into precise, actionable insights. This innovative approach enables Moody’s to deliver faster, more accurate risk assessments, solidifying its reputation as a trusted authority in financial decision-making.

Create smaller, faster, more cost-effective models with Model Distillation

Customers today are experimenting with a wide variety of models to find the one best suited to the unique needs of their business. However, even with all the models available today, it is challenging to find one with the right mix of specific knowledge, cost, and latency. Larger models are more knowledgeable, but they take longer to respond and cost more, while small models are faster and cheaper to run, but are not as capable. Model distillation is a technique that transfers the knowledge from a large model to a small model, while retaining the small model’s performance characteristics. However, doing this requires specialized machine learning (ML) expertise to work with training data, manually fine-tune the model, and adjust model weights without compromising the performance characteristics that led the customer to choose the smaller model in the first place. With Amazon Bedrock Model Distillation, any customer can now distill their own model that can be up to 500% faster and 75% less expensive to run than original models, with less than 2% accuracy loss for use cases like retrieval augmented generation (RAG). Now, customers can optimize to achieve the best combination of capabilities, accuracy, latency, and cost for their use case, with no ML expertise required.

With Amazon Bedrock Model Distillation, customers simply select the best model for a given use case and a smaller model from the same model family that delivers the latency their application requires at the right cost. After the customer provides sample prompts, Amazon Bedrock will do all the work to generate responses and fine-tune the smaller model, and it can even create more sample data, if needed, to complete the distillation process. This gives customers a model with the relevant knowledge and accuracy of the large model, but the speed and cost of the smaller model, making it ideal for production use cases, like real-time chat interactions. Model Distillation works with models from Anthropic, Meta, and the newly announced Amazon Nova models.
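The response-generation step described above can be pictured as building a training set in which each sample prompt is paired with the large model's answer. The sketch below is a simplified illustration, not the Amazon Bedrock Model Distillation API: build_distillation_set and toy_teacher are hypothetical names, the teacher is a stand-in function instead of a real model call, and the fine-tuning of the smaller model on the resulting pairs is left out.

```python
from typing import Callable, Iterable

def build_distillation_set(prompts: Iterable[str],
                           teacher: Callable[[str], str]) -> list:
    """Pair each unique sample prompt with the teacher model's response."""
    unique_prompts = dict.fromkeys(prompts)  # drops duplicates, keeps first-seen order
    return [{"prompt": p, "completion": teacher(p)} for p in unique_prompts]

def toy_teacher(prompt: str) -> str:
    # Stand-in for a call to the large model (assumption for illustration only).
    return f"Answer to: {prompt}"
```

The smaller model would then be fine-tuned on these prompt and completion pairs so that it learns to reproduce the larger model's answers for the target use case.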

Robin AI, which provides an AI-powered assistant that helps make complex legal processes quicker, cheaper, and more accessible, is using Model Distillation to help power high-quality legal Q&A across millions of contract clauses. Model Distillation helps Robin AI get the accuracy they need at a fraction of the cost, while faster responses provide a more fluid interaction between their customers and the assistant.

Automated Reasoning checks, multi-agent collaboration, and Model Distillation are all available in preview.

To learn more, visit:

- The AWS Blog for details on today’s announcements: Automated Reasoning checks, multi-agent collaboration, and Model Distillation.

- The Amazon Bedrock page to learn more about the capabilities.

- The Amazon Bedrock customer page to learn how companies are using Amazon Bedrock.

- The AWS re:Invent page for more details on everything happening at AWS re:Invent.

About Amazon Web Services

Since 2006, Amazon Web Services has been the world’s most comprehensive and broadly adopted cloud. AWS has been continually expanding its services to support virtually any workload, and it now has more than 240 fully featured services for compute, storage, databases, networking, analytics, machine learning and artificial intelligence (AI), Internet of Things (IoT), mobile, security, hybrid, media, and application development, deployment, and management from 108 Availability Zones within 34 geographic regions, with announced plans for 18 more Availability Zones and six more AWS Regions in Mexico, New Zealand, the Kingdom of Saudi Arabia, Taiwan, Thailand, and the AWS European Sovereign Cloud. Millions of customers, including the fastest-growing startups, largest enterprises, and leading government agencies, trust AWS to power their infrastructure, become more agile, and lower costs. To learn more about AWS, visit aws.amazon.com.

About Amazon

Amazon is guided by four principles: customer obsession rather than competitor focus, passion for invention, commitment to operational excellence, and long-term thinking. Amazon strives to be Earth’s Most Customer-Centric Company, Earth’s Best Employer, and Earth’s Safest Place to Work. Customer reviews, 1-Click shopping, personalized recommendations, Prime, Fulfillment by Amazon, AWS, Kindle Direct Publishing, Kindle, Career Choice, Fire tablets, Fire TV, Amazon Echo, Alexa, Just Walk Out technology, Amazon Studios, and The Climate Pledge are some of the things pioneered by Amazon. For more information, visit amazon.com/about and follow @AmazonNews.


Amazon.com, Inc. Media Hotline Amazon-pr@amazon.com

www.amazon.com/pr

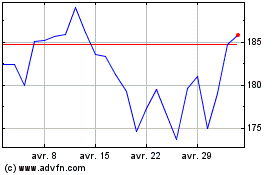

Amazon.com (NASDAQ:AMZN)

Graphique Historique de l'Action

De Nov 2024 à Déc 2024

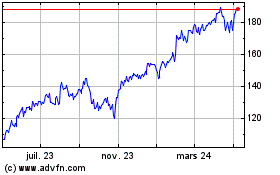

Amazon.com (NASDAQ:AMZN)

Graphique Historique de l'Action

De Déc 2023 à Déc 2024