- Mistral AI’s newest and most powerful model, Mistral Large, is available in the Snowflake Data Cloud for customers to securely harness generative AI with their enterprise data
- Snowflake Ventures partners with Mistral AI to expand generative AI capabilities and empower more developers to seamlessly tap into the power of leading large language models
- Snowflake Cortex LLM Functions, now in public preview, enable users to quickly, easily, and securely build generative AI apps

Snowflake (NYSE: SNOW), the Data Cloud company, and Mistral AI, one of Europe’s leading providers of AI solutions, today announced a global partnership to bring Mistral AI’s most powerful language models directly to Snowflake customers in the Data Cloud. Through this multi-year partnership, which includes a parallel investment in Mistral’s Series A from Snowflake Ventures, Mistral AI and Snowflake will deliver the capabilities enterprises need to seamlessly tap into the power of large language models (LLMs), while maintaining security, privacy, and governance over their most valuable asset: their data.


With the new Mistral AI partnership, Snowflake customers gain access to Mistral AI’s newest and most powerful LLM, Mistral Large, whose benchmark results place it among the world’s top-performing models. Beyond the benchmarks, Mistral AI’s new flagship model has unique reasoning capacities, is proficient in code and mathematics, and is fluent in five languages (French, English, German, Spanish, and Italian), in line with Mistral AI’s commitment to promoting cultural and linguistic specificities of generative AI technology. It can also process hundreds of pages of documents in a single call. In addition, Snowflake customers gain access to Mixtral 8x7B, Mistral AI’s open-source model that exceeds OpenAI’s GPT-3.5 in speed and quality on most benchmarks, alongside Mistral 7B, Mistral AI’s first foundation model, optimized for low latency with a low memory requirement and high throughput for its size. Mistral AI’s models are now available to customers in public preview as a part of Snowflake Cortex, Snowflake’s fully managed LLM and vector search service that enables organizations to accelerate analytics and quickly build AI apps securely with their enterprise data.

“By partnering with Mistral AI, Snowflake is putting one of the most powerful LLMs on the market directly in the hands of our customers, empowering every user to build cutting-edge, AI-powered apps with simplicity and scale,” said Sridhar Ramaswamy, CEO of Snowflake. “With Snowflake as the trusted data foundation, we’re transforming how enterprises harness the power of LLMs through Snowflake Cortex so they can cost-effectively address new AI use cases within the security and privacy boundaries of the Data Cloud.”

“Snowflake’s commitments to security, privacy, and governance align with Mistral AI’s ambition to put frontier AI in everyone’s hand and to be accessible everywhere. Mistral AI shares Snowflake’s values for developing efficient, helpful, and trustworthy AI models that advance how organizations around the world tap into generative AI,” said Arthur Mensch, CEO and co-founder of Mistral AI. “With our models available in the Snowflake Data Cloud, we are able to further democratize AI so users can create more sophisticated AI apps that drive value at a global scale.”

At Snowday 2023, Snowflake first announced Snowflake Cortex support for industry-leading LLMs for specialized tasks such as sentiment analysis, translation, and summarization, alongside foundation LLMs, starting with Meta AI’s Llama 2 model, for use cases including retrieval-augmented generation (RAG). Snowflake is continuing to invest in its generative AI efforts by partnering with Mistral AI and advancing the suite of foundation LLMs in Snowflake Cortex, providing organizations with an easy path to bring state-of-the-art generative AI to every part of their business. To deliver a serverless experience that makes AI accessible to a broad set of users, Snowflake Cortex eliminates the lengthy procurement cycles and complex management of GPU infrastructure by partnering with NVIDIA to deliver a full-stack accelerated computing platform that leverages NVIDIA Triton Inference Server, among other tools.

With Snowflake Cortex LLM Functions now in public preview, Snowflake users can leverage AI with their enterprise data to support a wide range of use cases. Using specialized functions, any user with SQL skills can leverage smaller LLMs to cost-effectively address specific tasks such as sentiment analysis, translation, and summarization in seconds. For more complex use cases, Python developers can go from concept to full-stack AI apps such as chatbots in minutes, combining the power of foundation LLMs, including Mistral AI’s LLMs in Snowflake Cortex, with chat elements, in public preview soon, within Streamlit in Snowflake. This streamlined experience also holds true for RAG with Snowflake’s integrated vector functions and vector data types, both in public preview soon, while ensuring the data never leaves Snowflake’s security and governance perimeter.

Snowflake is committed to furthering AI innovation not just for its customers and the Data Cloud ecosystem, but also for the wider technology community. As a result, Snowflake recently joined the AI Alliance, an international community of developers, researchers, and organizations dedicated to promoting open, safe, and responsible AI. Through the AI Alliance, Snowflake will continue to comprehensively and openly address both the challenges and opportunities of generative AI in order to further democratize its benefits.

Learn More:

- Register for Snowflake Data Cloud Summit 2024, June 3-6, 2024, in San Francisco, to get the latest on Snowflake’s AI innovations and upcoming announcements, here.
- Learn how organizations are bringing generative AI and LLMs to their enterprise data in this video.
- Dig into how all users can harness the power of generative AI and LLMs within seconds through Snowflake Cortex.
- Stay on top of the latest news and announcements from Snowflake on LinkedIn and Twitter.

Forward Looking Statements

This press release contains express and implied forward-looking statements, including statements regarding (i) Snowflake’s business strategy, (ii) Snowflake’s products, services, and technology offerings, including those that are under development or not generally available, (iii) market growth, trends, and competitive considerations, and (iv) the integration, interoperability, and availability of Snowflake’s products with and on third-party platforms. These forward-looking statements are subject to a number of risks, uncertainties and assumptions, including those described under the heading “Risk Factors” and elsewhere in the Quarterly Reports on Form 10-Q and the Annual Reports on Form 10-K that Snowflake files with the Securities and Exchange Commission. In light of these risks, uncertainties, and assumptions, actual results could differ materially and adversely from those anticipated or implied in the forward-looking statements. These statements speak only as of the date the statements are first made and are based on information available to us at the time those statements are made and/or management’s good faith belief as of that time. Except as required by law, Snowflake undertakes no obligation, and does not intend, to update the statements in this press release. As a result, you should not rely on any forward-looking statements as predictions of future events.

Any future product information in this press release is intended to outline general product direction. This information is not a commitment, promise, or legal obligation for us to deliver any future products, features, or functionality; and is not intended to be, and shall not be deemed to be, incorporated into any contract. The actual timing of any product, feature, or functionality that is ultimately made available may be different from what is presented in this press release.

© 2024 Snowflake Inc. All rights reserved. Snowflake, the Snowflake logo, and all other Snowflake product, feature and service names mentioned herein are registered trademarks or trademarks of Snowflake Inc. in the United States and other countries. All other brand names or logos mentioned or used herein are for identification purposes only and may be the trademarks of their respective holder(s). Snowflake may not be associated with, or be sponsored or endorsed by, any such holder(s).

About Snowflake

Snowflake enables every organization to mobilize their data with Snowflake’s Data Cloud. Customers use the Data Cloud to unite siloed data, discover and securely share data, power data applications, and execute diverse AI/ML and analytic workloads. Wherever data or users live, Snowflake delivers a single data experience that spans multiple clouds and geographies. Thousands of customers across many industries, including 691 of the 2023 Forbes Global 2000 (G2K) as of January 31, 2024, use Snowflake Data Cloud to power their businesses. Learn more at snowflake.com.

View source version on businesswire.com: https://www.businesswire.com/news/home/20240305158817/en/

For Snowflake
Kaitlyn Hopkins
Senior Product PR Lead, Snowflake
press@snowflake.com

For Mistral AI
Alexandra van Weddingen
Alva Conseil
avanweddingen@alvaconseil.com

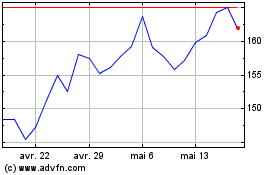

Snowflake (NYSE:SNOW)

Graphique Historique de l'Action

De Mar 2024 à Avr 2024

Snowflake (NYSE:SNOW)

Graphique Historique de l'Action

De Avr 2023 à Avr 2024